Ben Dali

Cloud Solutions Architect

Professional Projects at Work

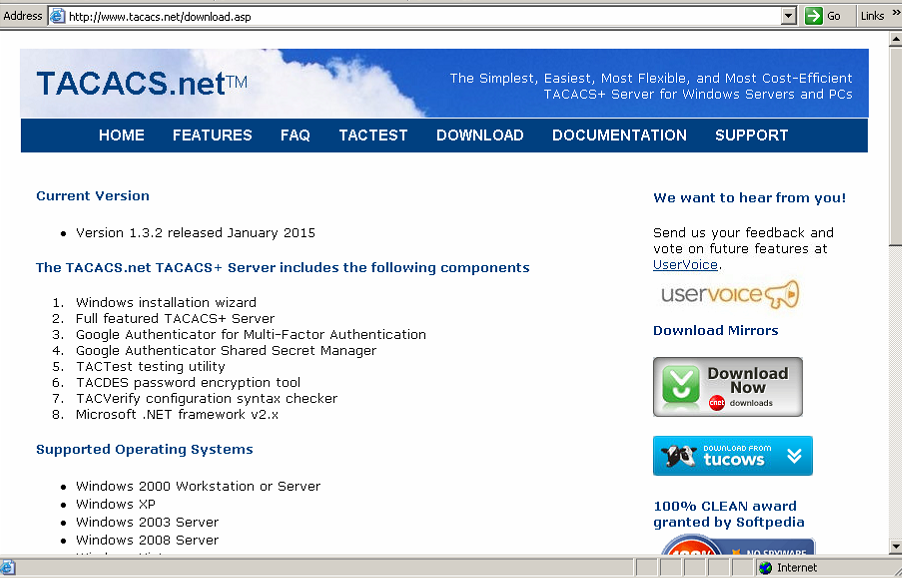

TACACS Server Implementation

Implementing a TACACS server for a network infrastructure of Cisco Catalyst and Nexus family devices (N4K, N5K, N7K, MDS, etc.), integrated with Active Directory-based authentication. Authorization rules follow an authorization matrix that divides users into groups (administrators, operators, and read-only users); group membership is retrieved from the Active Directory database through an LDAP query.

The service provides role-based access for users across domains and tiers, and a single point of management: there is no need to create users on each device or to maintain their passwords. It also supplies AAA (Authentication, Authorization, and Accounting), so changes that users make on devices can be tracked.
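The authorization matrix described above comes down to mapping a user's Active Directory group memberships to a single role. The following is a minimal sketch, assuming hypothetical group names (`NET-Admins`, `NET-Operators`, `NET-ReadOnly`) and IOS-style privilege levels. It builds an escaped LDAP filter for the user lookup and picks the most privileged matching role, denying access when no group matches. In a real deployment this logic lives in the TACACS+ server's policy configuration, and Nexus devices typically receive a role name (for example `network-admin`) rather than a privilege level.

```python
from typing import Optional

# Hypothetical authorization matrix: AD group CN -> (role, privilege level).
# Group names and levels are illustrative, not the project's real matrix.
AUTHZ_MATRIX = {
    "NET-Admins": ("administrator", 15),
    "NET-Operators": ("operator", 7),
    "NET-ReadOnly": ("read-only", 1),
}

# RFC 4515: characters that must be escaped inside an LDAP filter value.
_LDAP_ESCAPES = {"\\": r"\5c", "*": r"\2a", "(": r"\28", ")": r"\29", "\0": r"\00"}


def user_filter(username: str) -> str:
    """LDAP filter for looking up an AD user account by its logon name."""
    safe = "".join(_LDAP_ESCAPES.get(ch, ch) for ch in username)
    return f"(&(objectClass=user)(sAMAccountName={safe}))"


def group_cn(dn: str) -> str:
    """CN of a group DN such as 'CN=NET-Admins,OU=Groups,DC=example,DC=com'.
    Simplified: does not handle escaped commas inside the CN."""
    attr, _, value = dn.split(",", 1)[0].partition("=")
    return value if attr.strip().upper() == "CN" else ""


def resolve_role(member_of: list[str]) -> Optional[tuple[str, int]]:
    """Highest-privilege role among the user's groups, or None (deny access)."""
    matches = [AUTHZ_MATRIX[cn] for cn in map(group_cn, member_of) if cn in AUTHZ_MATRIX]
    return max(matches, key=lambda role: role[1]) if matches else None


if __name__ == "__main__":
    groups = [
        "CN=Domain Users,CN=Users,DC=example,DC=com",
        "CN=NET-Operators,OU=Groups,DC=example,DC=com",
        "CN=NET-ReadOnly,OU=Groups,DC=example,DC=com",
    ]
    print(user_filter("jdoe"))
    print(resolve_role(groups))  # ('operator', 7)
```

Picking the highest matching level keeps the matrix simple when a user belongs to several groups; a stricter policy could instead reject users with conflicting memberships.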

LDAP

Active Directory

RBAC

Cisco

Security

XML

Encryption

Centralized Authentication

Management

Network

VMware NSX Deployment

VMware NSX is a software-defined networking (SDN) technology that offers many benefits to an organization, especially one running a large development line that requires fast deployments, self-service, and automated day-to-day changes at large scale.

The project included:

- Installing the required VMware infrastructure for NSX (management cluster, payload cluster, etc.).

- Configuring the network in order to support VXLAN.

- Installing the NSX Manager and Controllers, and deploying Edges, DLRs (Distributed Logical Routers), PLRs (Provider Logical Routers), and the other infrastructure NSX requires.

- Creating the required objects, including Security Groups, tags, and policies.

- Configuring NSX Load Balancers (on Edges).

- Maintaining the system and performing day-to-day activities.

- Upgrading the environment.

- Troubleshooting.
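Configuring the network for VXLAN is largely an MTU exercise. VXLAN (RFC 7348) wraps each inner Ethernet frame in outer Ethernet, IPv4, and UDP headers plus an 8-byte VXLAN header, so the transport network must carry larger frames than the VMs send. The sketch below, assuming an IPv4 underlay without IP options and an untagged outer frame, computes that requirement and packs the VXLAN header. The VNI 5001 is an arbitrary example value.

```python
import struct

# Header sizes in bytes (IPv4 underlay, no IP options, untagged outer frame assumed).
OUTER_ETH = 14   # outer Ethernet header
OUTER_IPV4 = 20  # outer IPv4 header
OUTER_UDP = 8    # outer UDP header (IANA port 4789 per RFC 7348)
VXLAN_HDR = 8    # VXLAN header
INNER_ETH = 14   # inner Ethernet header (the inner FCS is not carried)
DOT1Q_TAG = 4    # optional 802.1Q tag on the inner frame

VXLAN_OVERHEAD = OUTER_ETH + OUTER_IPV4 + OUTER_UDP + VXLAN_HDR  # 50 bytes per frame


def required_underlay_ip_mtu(inner_ip_mtu: int = 1500, inner_vlan_tagged: bool = False) -> int:
    """Minimum IP MTU on transport (VTEP) interfaces so that a VM frame carrying
    `inner_ip_mtu` bytes of IP payload is encapsulated without fragmentation."""
    inner_frame = inner_ip_mtu + INNER_ETH + (DOT1Q_TAG if inner_vlan_tagged else 0)
    return inner_frame + VXLAN_HDR + OUTER_UDP + OUTER_IPV4


def vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header: flags byte with the I bit (0x08),
    24 reserved bits, the 24-bit VNI, then 8 reserved bits."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", 0x08 << 24, vni << 8)


if __name__ == "__main__":
    print(required_underlay_ip_mtu())            # 1550
    print(required_underlay_ip_mtu(1500, True))  # 1554
    print(vxlan_header(5001).hex())              # 0800000000138900
```

Both figures fall below the 1600-byte MTU that VMware recommends for NSX transport networks, which is why 1600 is the usual target when preparing the physical switches.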

More information about NSX can be found here.

SDN

Network

VMware

VMware NSX Data Center

Overlay Network

Virtualization

Private Cloud (VMware)

Data Center Relocation

Planning and performing the relocation of a data center containing more than 5,000 servers that provide the platform for the developers' baselines.

Project tasks (High-Level):

- Planning hardware movements.

- Scheduling timelines with the entire organization, vendors, and suppliers.

- Scheduling downtime with more than 2,000 users.

- Validating backups.

- Creating pre-configuration files for the network architecture changes required by the movement plan.

- Planning additional activities to use the downtime for work on critical components:

  - Upgrading the network backbones (Cisco Nexus 7K) to a newer, stable software version.

  - Upgrading the low-latency network clusters (Cisco N5K switches).

  - Upgrading the storage systems and replacing some of them with flash arrays for better performance.

  - Replacing the InfiniBand switches with newer models (Mellanox).

  - Upgrading the storage cluster (EMC Isilon) to a newer version.

  - Moving connections to new Fibre Channel (FC) switches.

- Shutting down servers and services in order of their roles and core priority.

- Troubleshooting.

- Testing before returning to production.

- Bringing the system back to production.
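Shutting services down by role and priority, and bringing them back afterwards, is at heart a dependency-ordering problem. A minimal sketch using Python's standard `graphlib` module (3.9+) groups services into waves: startup follows topological order, and shutdown runs the waves in reverse so that no service loses a dependency while it is still running. The service names and dependencies are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: service -> services it needs while running.
DEPENDS_ON = {
    "ci-server": {"build-farm", "ldap"},
    "build-farm": {"nfs-storage", "ldap", "dns"},
    "nfs-storage": {"fc-fabric", "dns"},
    "ldap": {"dns"},
    "dns": set(),
    "fc-fabric": set(),
}


def startup_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group services into waves; each service starts after all its dependencies.
    Services in the same wave can be handled in parallel.
    Raises graphlib.CycleError if the dependency map contains a cycle."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        wave = sorted(sorter.get_ready())
        waves.append(wave)
        sorter.done(*wave)
    return waves


def shutdown_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Reverse of startup: dependents stop before the services they rely on."""
    return startup_waves(deps)[::-1]


if __name__ == "__main__":
    for i, wave in enumerate(shutdown_waves(DEPENDS_ON), 1):
        print(f"shutdown wave {i}: {', '.join(wave)}")
```

Computing both orders from one dependency map keeps the shutdown and bring-up plans consistent with each other.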

Data Center

Network

Storage

Infrastructure

VMware

EMC

Isilon

Infiniband

Cisco

Fibre Channel Switches

Design Planning

Hadoop Infrastructure Project

Design and deployment of six Hadoop cluster environments.

The design included:

- Environment redundancy, rack awareness, cluster data replication, and a monitoring solution.

- A full network architecture (including low-latency concerns and F5 load-balancing needs).

- A security architecture (firewalls, WAFs).

- Fast deployment using Kickstart and Puppet servers.

- Working in continuous-integration mode using Agile methodologies.

- Deploying core components (DNS, DHCP, security mechanisms) in a virtual environment.

- Upgrading the systems.

- Providing three development lines (performance, staging, and production).
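Rack awareness, listed in the design above, is commonly configured in Hadoop by pointing the `net.topology.script.file.name` property at an executable that receives DataNode IP addresses or host names as arguments and prints one rack path per argument. A minimal sketch of such a script follows, assuming racks can be derived from server subnets; the subnets and rack names are hypothetical, and host names are not resolved.

```python
#!/usr/bin/env python3
"""Hypothetical Hadoop rack-awareness topology script (subnet -> rack path)."""
import ipaddress
import sys

# Hypothetical mapping of server subnets to rack paths.
SUBNET_TO_RACK = {
    ipaddress.ip_network("10.10.1.0/24"): "/dc1/rack1",
    ipaddress.ip_network("10.10.2.0/24"): "/dc1/rack2",
    ipaddress.ip_network("10.10.3.0/24"): "/dc1/rack3",
}
DEFAULT_RACK = "/default-rack"  # Hadoop's own fallback rack name


def rack_for(host: str) -> str:
    """Rack path for an IP address; unknown or non-IP inputs get the default rack."""
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        return DEFAULT_RACK  # host names are not resolved in this sketch
    for net, rack in SUBNET_TO_RACK.items():
        if addr in net:
            return rack
    return DEFAULT_RACK


if __name__ == "__main__":
    # One whitespace-separated rack path per argument, in argument order.
    print(" ".join(rack_for(arg) for arg in sys.argv[1:]))
```

Deriving the rack from the subnet keeps the script maintenance-free as long as each rack has its own subnet; otherwise a static host-to-rack table is the usual alternative.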

Hadoop runs on a cluster of Linux servers that together act as one large resource. It is an open-source software framework for distributed storage and distributed processing of very large data sets on computer clusters built from commodity hardware.

All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common and should be automatically handled by the framework. The core of Apache Hadoop consists of a storage part, known as Hadoop Distributed File System (HDFS), and a processing part called MapReduce. Hadoop splits files into large blocks and distributes them across nodes in a cluster.
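The block splitting and fault tolerance described above can be sketched in a few lines. The sketch assumes the default block size of 128 MB (`dfs.blocksize` in Hadoop 2.x and later) and a replication factor of 3. It follows a simplified version of the default placement rule from the HDFS Architecture Guide: one replica on the writer's node, and the other two on two different nodes of a single remote rack, so losing a whole rack never removes every copy. Real placement also weighs free space and node load; the cluster layout and function names are hypothetical.

```python
import random

BLOCK_SIZE = 128 * 1024 * 1024  # default dfs.blocksize (128 MB) in Hadoop 2.x and later


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Sizes of the blocks a file occupies; only the last block may be smaller."""
    full, rest = divmod(file_size, block_size)
    return [block_size] * full + ([rest] if rest else [])


def place_replicas(writer: str, racks: dict[str, list[str]], rng: random.Random) -> list[str]:
    """Simplified default placement for replication factor 3.

    Assumes the writer is itself a DataNode and that at least one other rack
    has two or more nodes; ignores free space, load, and node health.
    """
    rack_of = {node: rack for rack, nodes in racks.items() for node in nodes}
    remote_racks = sorted(
        r for r, nodes in racks.items() if r != rack_of[writer] and len(nodes) >= 2
    )
    remote = rng.choice(remote_racks)
    second, third = rng.sample(racks[remote], 2)
    return [writer, second, third]  # local node, then two nodes on one remote rack


if __name__ == "__main__":
    racks = {"/rack1": ["dn1", "dn2", "dn3"], "/rack2": ["dn4", "dn5", "dn6"]}
    blocks = split_into_blocks(300 * 1024 * 1024)
    print([b // (1024 * 1024) for b in blocks])  # [128, 128, 44]
    rng = random.Random(42)
    for i, _ in enumerate(blocks):
        print(i, place_replicas("dn2", racks, rng))
```

Keeping two replicas on one remote rack, rather than three racks, limits cross-rack write traffic while still surviving a full rack failure.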

You can read more about the Apache Hadoop project by following this link.

Hadoop

Big Data

Network

Infrastructure

HDFS

Kickstart

Load Balancing

Nagios (Open Source Monitoring System) Installation and Customization

Design and deployment of a Nagios monitoring system:

- Monitoring corporate infrastructure components (network switches, routers, firewalls, wireless systems, physical servers, etc.).

- Customizing the system according to different SLAs, device types, deployments, alerting groups, operating systems, and other variables.

- Maintaining the system on a daily basis.

- Performing periodic upgrades.

- Deploying distributed system monitoring.
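Customizing monitoring by SLA, device type, and alerting group, as listed above, is often handled by generating Nagios object definitions from an inventory rather than editing them by hand. The sketch below shows one hypothetical way to do that. The SLA tiers, template names (`generic-<type>`), hostgroup naming, and contact groups are assumptions and must exist in your Nagios configuration; the `24x7` and `workhours` time periods come from the Nagios sample configuration. Directives it does not set, such as `check_period` and `notification_interval`, are expected to be inherited from the template.

```python
from dataclasses import dataclass

# Hypothetical SLA tiers; check_interval is in minutes with the default interval_length of 60s.
SLA_PROFILES = {
    "gold": {"check_interval": 1, "max_check_attempts": 2, "notification_period": "24x7"},
    "silver": {"check_interval": 5, "max_check_attempts": 3, "notification_period": "24x7"},
    "bronze": {"check_interval": 15, "max_check_attempts": 5, "notification_period": "workhours"},
}


@dataclass
class Device:
    name: str
    address: str
    device_type: str    # e.g. "switch", "firewall", "linux-server"
    sla: str            # key into SLA_PROFILES
    contact_group: str  # alerting group notified for this device


def host_definition(dev: Device) -> str:
    """Render one Nagios 'define host' block for a device."""
    directives = {
        "use": f"generic-{dev.device_type}",  # hypothetical per-type template
        "host_name": dev.name,
        "alias": dev.name,
        "address": dev.address,
        "hostgroups": f"{dev.device_type}s",  # hypothetical hostgroup naming
        "contact_groups": dev.contact_group,
        **SLA_PROFILES[dev.sla],              # SLA-specific check and notification settings
    }
    body = "\n".join(f"    {key:<22}{value}" for key, value in directives.items())
    return "define host {\n" + body + "\n}"


if __name__ == "__main__":
    inventory = [
        Device("core-sw01", "10.0.0.1", "switch", "gold", "network-oncall"),
        Device("build-srv17", "10.0.5.17", "linux-server", "bronze", "linux-admins"),
    ]
    print("\n\n".join(host_definition(d) for d in inventory))
```

Generating the files this way keeps every device of a given SLA tier on identical check and notification settings, so an SLA change is a one-line edit.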

For more information about Nagios, follow this link.

Nagios

Open Source

Linux

Customizations